Amazon Web Services (AWS), which holds a 33% share of the cloud computing market, is now producing artificial intelligence chips at a 40% discount to the $30,000 it pays for Nvidia’s fastest AI chip.

As the AI boom has accelerated, most companies have been completely reliant on Nvidia for state-of-the-art 4-nanometer chips. Amazon ordered 2 million Nvidia H100 80 GB GPU chips, but received only 20,000 in 2022 and about 50,000 in 2023.

With AI demand going through the roof, Nvidia, with a 90 percent market share, has not been willing to discount data center chips that carry profit margins of over 50 percent.

In the hope of eventually competing head-to-head against Nvidia, Amazon Web Services paid $350 million in 2015 to buy a start-up chip designer named Annapurna in Austin, Texas.

After years of development, Amazon will release ‘Trainium2’ as the semiconductor industry’s second real hyperscaler chip. Trainium2 is already being tested by Anthropic, the OpenAI competitor that raised $4 billion from an Amazon consortium that included Databricks, Deutsche Telekom, and Japan’s Ricoh and Stockmark.

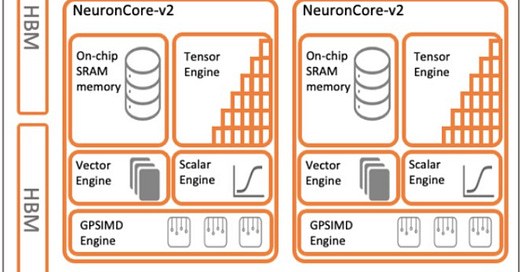

According to Next Generation, AWS began successfully making its own DPUs (data processing units) in 2017, then decided to go old-school and vertically integrate. Amazon has produced several generations of its Nitro DPUs, four generations of Graviton Arm server CPUs, two generations of Inferentia AI inference accelerators, and now a second generation of Trainium AI training accelerators.

The Trainium2 chip was first revealed alongside the Graviton4 server CPU at re:Invent 2023 in Las Vegas. After extensive testing, Annapurna is set to release Trainium2 to AWS customers in December.

AWS capital spending was $48.4 billion in 2023, and the company expects to spend $75 billion on capital expenditures in 2024 as it grows its infrastructure to support AI services.

Demand from the artificial intelligence industry is so hot that AWS intends to keep buying the rest of the 2 million Nvidia chips on order, despite the massive price differential. Nvidia’s other biggest customers, Microsoft and Meta, are also designing their own data center chips in response to AI growth.